Data Processing with Edge Computing

Edge computing is a distributed computing framework that brings business applications closer to data sources such as IoT devices or local edge servers. Being close to where the data originates can deliver strong business benefits, including faster insights, shorter response times, and better bandwidth availability. Edge computing is not a particular technology but an architecture: a location- and topology-sensitive form of distributed computing.

Edge computing is already in use all around us, from the gadget on your wrist to the computers analyzing traffic flow at intersections. Other applications include agricultural management using drones, smart utility grid analysis, safety monitoring of oil rigs, and streaming video optimization.

Edge computing optimizes web apps and Internet-connected devices by moving processing closer to the data source. As a result, clients and servers need to communicate over long distances less often, which lowers both latency and bandwidth usage. At the Internet of Things (IoT) edge, a network receives real-time data from sensors and devices. Because IoT edge computing processes that data closer to its point of origin, it reduces the latency that comes with sending everything to the cloud. In general, edge computing handles time-sensitive data, while cloud computing handles data that is not time-sensitive. Besides latency, edge computing is also preferred over cloud computing in remote areas with poor or no connectivity to a centralized location.
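To make the bandwidth argument concrete, here is a minimal Python sketch of what an edge node might do with a window of sensor readings: pick out time-sensitive values locally and forward only a compact summary instead of every raw reading. The `Summary` record, the `process_at_edge` function, and the fixed `alert_threshold` are hypothetical illustrations, not part of any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact per-window summary an edge node could forward (hypothetical format)."""
    sensor_id: str
    count: int
    average: float
    minimum: float
    maximum: float
    alerts: list[float]


def process_at_edge(sensor_id: str, readings: list[float], alert_threshold: float) -> Summary:
    """Reduce a non-empty window of raw readings to a summary on the edge node.

    Readings above alert_threshold are picked out locally, so a time-sensitive
    reaction does not have to wait for a cloud round trip.
    """
    alerts = [r for r in readings if r > alert_threshold]
    return Summary(
        sensor_id=sensor_id,
        count=len(readings),
        average=mean(readings),
        minimum=min(readings),
        maximum=max(readings),
        alerts=alerts,
    )


if __name__ == "__main__":
    window = [21.5, 22.0, 21.8, 35.2, 22.1]  # e.g. temperature readings
    summary = process_at_edge("temp-01", window, alert_threshold=30.0)
    print(summary.count, summary.maximum, summary.alerts)  # 5 35.2 [35.2]
```

The node sends one small record per window rather than the whole window; how much bandwidth this actually saves depends on the window size and on what the cloud side needs to see.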

Edge Computing Origins

Edge computing first gained traction in the 1990s, when Akamai launched its content delivery network (CDN), which placed nodes geographically closer to end users. These nodes store cached static content such as images and videos. Edge computing extends this idea by letting the nodes perform basic computational tasks as well. In 1997, computer scientist Brian Noble demonstrated how edge computing could be used for voice recognition on mobile devices. Two years later, the same technique was used to extend the battery life of mobile phones. This approach, known at the time as "cyber foraging," is essentially how Apple's Siri and Google's speech recognition services work.

Beyond lowering latency, the distributed nature of edge computing also increases resilience, reduces network load, and makes scaling easier. Data processing begins at the point of origin, and once initial processing is finished, only the data that needs further analysis or other services has to be transmitted. This reduces networking requirements and the risk of bottlenecks at centralized services. You can also mask outages and make your system more resilient by falling back to nearby edge locations or by storing data on the device. Because your centralized services handle less traffic, they don't need to be scaled as much, which can reduce costs, design complexity, and management overhead.
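The outage-masking idea can be sketched as a store-and-forward buffer: records are queued on the device and forwarded to the first reachable uplink, for example the primary edge site and then an adjacent one. This is a minimal Python sketch under assumed names (`StoreAndForward`, and uplinks modeled as plain functions that return `True` on success); a production version would likely also need persistence, retries with backoff, and acknowledgements.

```python
from collections import deque
from typing import Callable

# Assumption: an uplink is modeled as a function that returns True if the record was delivered.
Uplink = Callable[[dict], bool]


class StoreAndForward:
    """Queue records on the device and forward them when an uplink is reachable.

    Uplinks are tried in order (for example, the primary edge site first and an
    adjacent site second). If none accepts a record, it stays in the local buffer.
    """

    def __init__(self, uplinks: list[Uplink], capacity: int = 10_000) -> None:
        self.uplinks = uplinks
        # Bounded buffer: once full, appending discards the oldest record.
        self.buffer: deque = deque(maxlen=capacity)

    def submit(self, record: dict) -> None:
        """Store the record locally, then try to drain the backlog."""
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Forward buffered records in order; return how many were delivered."""
        sent = 0
        while self.buffer:
            record = self.buffer[0]
            # any() stops at the first uplink that accepts the record.
            if not any(uplink(record) for uplink in self.uplinks):
                break  # every uplink is down: keep the record for a later flush
            self.buffer.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    links_up = {"primary": False, "neighbor": False}

    def make_uplink(name: str) -> Uplink:
        def send(record: dict) -> bool:
            if links_up[name]:
                print(f"{name} received seq {record['seq']}")
                return True
            return False
        return send

    node = StoreAndForward([make_uplink("primary"), make_uplink("neighbor")])
    node.submit({"seq": 1})
    node.submit({"seq": 2})
    print(len(node.buffer))  # 2: both links are down, so the records stay on the device
    links_up["neighbor"] = True
    print(node.flush())  # 2: the adjacent site takes the backlog
```

The bounded `deque` is a design choice: when the device runs out of buffer space, the oldest records are dropped first, trading completeness for keeping the node running.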

Edge & Cloud Computing: Will One Replace the Other, or Will They Coexist?

We are currently experiencing exponential growth in IT, along with a rise in the availability and overall volume of data, and this growth is the primary driver behind these developments. It is also what first sparked the whole cloud computing concept, since there was simply too much data to store or analyze locally. The relationship between edge computing and cloud computing can be complicated, so it is worth asking whether edge computing will eventually replace the cloud or whether the two will coexist. Given how powerful, successful, and widely used cloud computing is, it may seem strange that a new paradigm is needed at all. The real question is what the cloud model lacks that leaves room for a different way of handling tasks outside its scope. Because centralized processing will probably remain necessary for some time, edge computing is unlikely to replace cloud computing. Instead, it was developed in response to the cloud's drawbacks. Edge computing, meaning processing that takes place at the edge of the network, is extremely useful in many contexts, most notably in parts of the Internet of Things. Given how quickly the IoT is growing and the bold predictions about its future importance, this makes the IoT especially relevant to any discussion of edge computing.
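One way to picture coexistence rather than replacement is a placement rule that keeps work in the cloud by default and moves it to the edge only when a cloud round trip would miss the deadline. The sketch below is a hypothetical Python illustration: `Task`, `place`, and the millisecond figures are assumptions, and it simplifies by assuming processing takes the same time on both tiers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline_ms: float  # how soon the result is needed
    compute_ms: float   # estimated processing time, assumed equal on edge and cloud


def place(task: Task, edge_rtt_ms: float, cloud_rtt_ms: float) -> str:
    """Prefer the cloud; fall back to the edge only when the deadline requires it."""
    if task.compute_ms + cloud_rtt_ms <= task.deadline_ms:
        return "cloud"   # not time-critical enough to need the edge
    if task.compute_ms + edge_rtt_ms <= task.deadline_ms:
        return "edge"    # only the nearby site can answer in time
    return "reject"      # neither tier can meet the deadline


if __name__ == "__main__":
    tasks = [
        Task("collision-warning", deadline_ms=20, compute_ms=5),
        Task("monthly-report", deadline_ms=60_000, compute_ms=2_000),
    ]
    for t in tasks:
        # Prints "collision-warning -> edge" and "monthly-report -> cloud".
        print(t.name, "->", place(t, edge_rtt_ms=2, cloud_rtt_ms=80))
```

With these assumed numbers, the time-sensitive task lands on the edge while the batch job stays in the cloud, so both tiers keep a role.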

What Does Edge Computing Mean for Businesses in the Future?

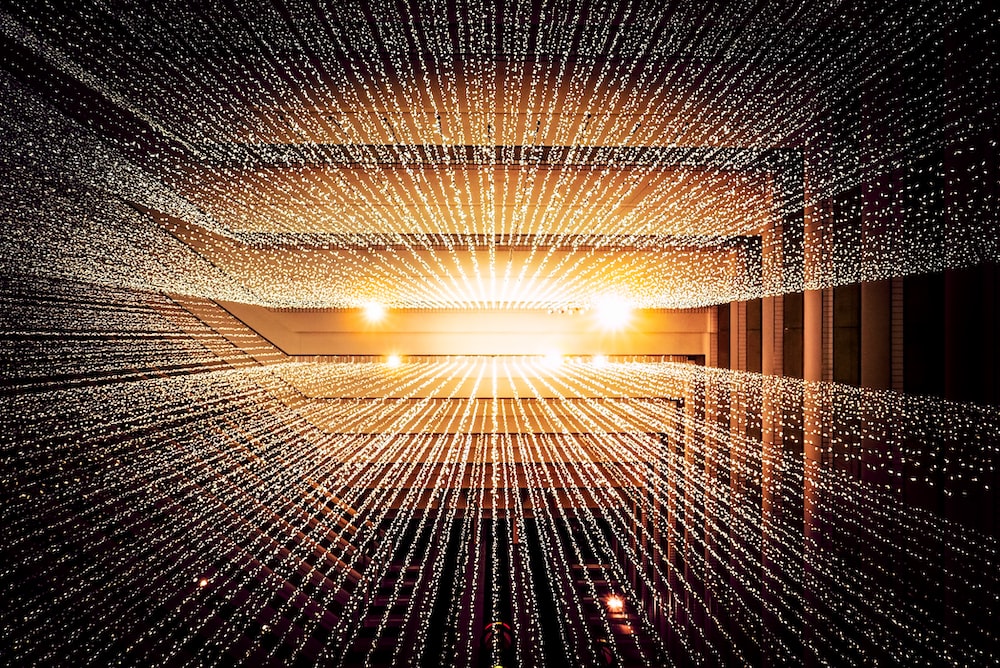

Convergent technologies are using edge computing to transform our daily lives and the way we do business. Edge computing relies on smaller, more numerous data hubs that are physically closer to users, that is, closer to where people use their devices. With new technologies and applications constantly being developed, we are drowning in a sea of data. Sending all of it to an already overloaded data warehouse takes time. We're not talking about days, but about your Netflix show constantly lagging or your downloads taking forever. It irritates me. In areas such as medicine or high-speed transportation, these delays can also be dangerous. As edge computing grows, response times will get faster and less bandwidth will be used, although building out the infrastructure will take some time.

Edge computing is ideal for problems that involve processing data in real time. Computing resources at the "edge" of the network can respond much more quickly than a massive data center located miles away from users. Building servers at the edge yields better response times and transfer rates. Again, we're talking about very small amounts of time, but every millisecond matters. In plain English, edge computing reduces both the amount of data that has to be transported and the distance it has to travel. That means less traffic, which leads to faster data flow, lower latency (the time it takes for your command to be carried out), and lower transmission costs. Edge computing is the future, especially in light of 5G, which essentially means faster data transmission.
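To put rough numbers on "miles away", the following Python sketch computes the minimum round-trip time imposed by distance alone. It assumes light in optical fiber travels about 200 km per millisecond (roughly two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays, so real latencies are higher; the distances and the `min_round_trip_ms` helper are illustrative assumptions.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Routing, queuing, and processing delays are ignored, so real values are higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    sites = [("edge site", 10), ("regional data center", 500), ("distant data center", 2_000)]
    for label, km in sites:
        print(f"{label}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

This prints about 0.1 ms, 5.0 ms, and 20.0 ms respectively: distance alone puts a floor under latency, and moving computation closer is the only way to lower that floor.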